I will use the following commands to display the values:

/c game.player.print(game.map_settings.enemy_expansion.min_expansion_cooldown)

/c game.player.print(game.map_settings.enemy_expansion.max_expansion_cooldown)

The result in game shows the expected values:

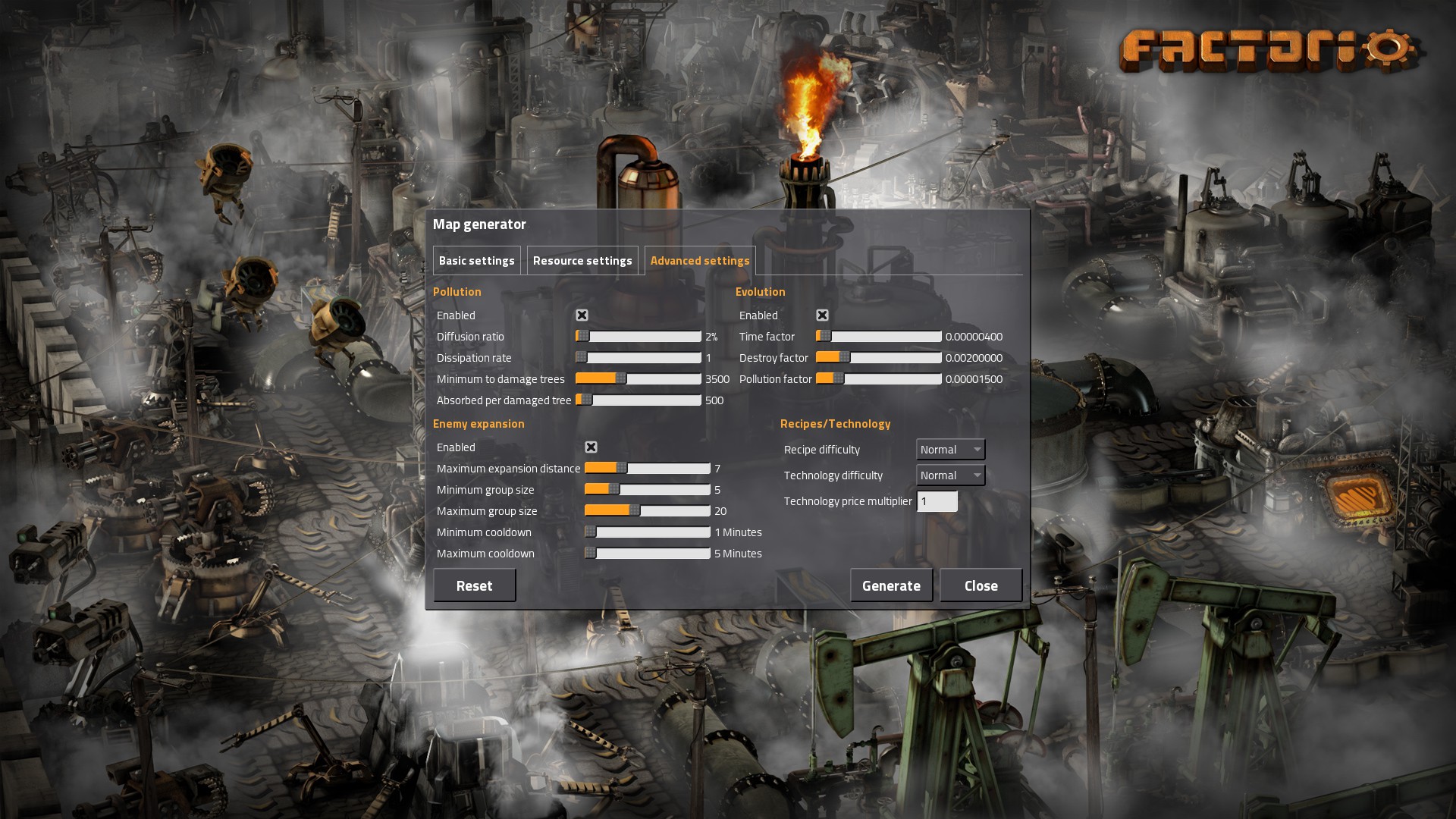

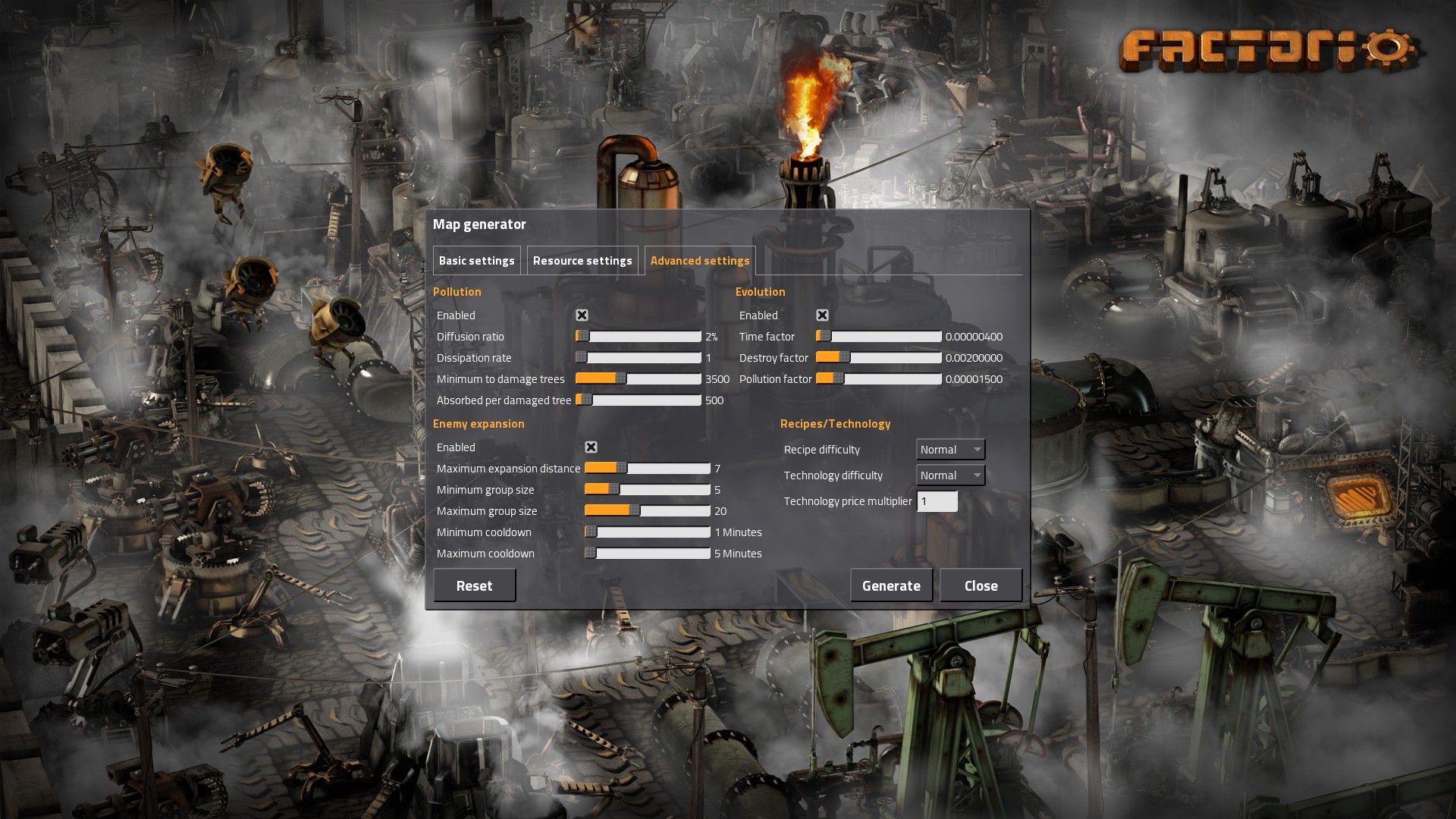

In this next set you can clearly see the Minimum cooldown slider moved slightly to the right, but it still shows 1 minute. The Maximum cooldown slider is harder to micro-adjust to get a similar result because of its larger span of values. However, if you try other values you can also get unexpected values in game. I was just going for clear visual evidence.

Results ingame

As you can see in the last image, the value for the minimum expansion cooldown is way off from what should be expected. Originally I thought it was some sort of FPS/tick calculation done on the fly. However, it now seems to me that the GUI slider produces decimal values that it is not rounding off or handling properly, which results in a discrepancy in the values in game.

Honestly, is it a big deal? Probably not. So I don't know if you want to fix it or not, but it could cause issues later for anything that wants or needs far more precise values. The only reason I even found it is that I was trying to figure out what the values in the config file meant. Then I finally realized that the values are in ticks, at 60 ticks per second. That is why I noticed the discrepancy when printing the values after using the sliders in the GUI.

I will mention again that a comment in the config files stating that the values are expected in ticks (1 minute = 60*60 = 3600 ticks) would probably help a lot of other people. Going back, I see you do mention this for pollution, which I overlooked because I wasn't looking to change that. Oh well, anyway.
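For anyone else reading these config values, here is a minimal sketch of the minutes/ticks conversion in plain Lua. It assumes the game runs at 60 ticks per second. The helper names are made up for illustration and are not part of the game's API, and the rounding helper only shows what I would expect from the slider, not what the GUI actually does.

```lua
-- Assumption: 60 game ticks per second, so 1 minute = 60 * 60 = 3600 ticks.
local TICKS_PER_SECOND = 60
local TICKS_PER_MINUTE = TICKS_PER_SECOND * 60

-- Hypothetical helper: convert a duration in minutes to game ticks.
local function minutes_to_ticks(minutes)
  return minutes * TICKS_PER_MINUTE
end

-- Hypothetical helper: convert a tick count back to minutes.
local function ticks_to_minutes(ticks)
  return ticks / TICKS_PER_MINUTE
end

-- Hypothetical helper: round a fractional tick count to the nearest whole tick.
local function round_to_nearest_tick(ticks)
  return math.floor(ticks + 0.5)
end

print(minutes_to_ticks(1)) -- 3600
```

In the console, dividing the printed value by 3600, for example `/c game.player.print(game.map_settings.enemy_expansion.min_expansion_cooldown / 3600)`, should show the cooldown in minutes, which makes it easier to compare against what the slider displays.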