> Klonan wrote:
>> maccs wrote: This new system could also adapt to changing needs. If there is a sudden need for double the items in the same time the player wouldn't have to tweak every request, but the network would notice this and would assign more robots on the job.
>
> This might be possible with combinators in 0.15

This is a lot of what I think makes the lognet so cool. Also, this is possible with combinators now.

That said, I haven't fully learned the circuit network yet, so this is 'technically' theoretical. But essentially, we can say:

1) The logistic network architecture is *roughly* analogous to the client-server model of the internet. It is made up of hosts that fall into two categories: requests come from clients and are filled by providers (servers), and data (in this case, resources) is carried in anonymous 'packets' (robots).

The biggest difference is the robots: unlike packets, they are finite, must be distributed among roboports (which act somewhat like routers), and can only travel so far (or so many port 'hops') on a single charge, after which they incur a penalty (based on congestion) while they recharge. So performance is obviously a bit harder to quantify, but the components serve equivalent functions.
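To make the 'harder to quantify' point concrete, here is a rough Python model of a single robot's flight time. It is a sketch under made-up assumptions, not the game's actual logic: `trip_time` and all of its parameters (constant speed, a fixed range per charge, a flat per-stop recharge wait standing in for roboport congestion) are hypothetical.

```python
import math


def trip_time(distance, speed, range_per_charge, recharge_wait):
    """Rough one-way flight time for a single robot (hypothetical model).

    distance         -- tiles between pickup and delivery
    speed            -- tiles per second
    range_per_charge -- tiles a robot can fly before it must stop to recharge
    recharge_wait    -- seconds per recharge stop, including any queueing at a
                        busy roboport (the congestion penalty)
    """
    flight = distance / speed
    # Recharge stops needed along the way (0 if a single charge suffices).
    stops = max(0, math.ceil(distance / range_per_charge) - 1)
    return flight + stops * recharge_wait
```

With these invented numbers, a 100-tile trip at 5 tiles/s with a 60-tile range takes 30 s when each recharge stop costs 10 s, but 60 s when a congested roboport stretches that stop to 40 s, while a 50-tile trip needs no stop at all. Distance alone does not predict delivery time.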

2)

> Rseding91 wrote: You can already "solve" this by increasing the request amount.

Somewhat true. The precise amount necessary depends on everything that affects response latency: roboport placement/topology; robot availability (the total number as well as their location relative to the request and the requested resource); competing requests; and the current level of congestion, but *only* if that congestion is on a link the robot would use for this request. Many of these are entirely transient (e.g. congestion due to a concrete-laying job), while some remain roughly constant (the requests in a network don't change all that much, although unfilled requests can vary widely).
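A back-of-the-envelope way to size a request borrows the networking idea of a bandwidth-delay product: the chest has to hold enough items to cover consumption for as long as a refill takes to arrive. This sketch assumes steady consumption and a single latency figure that lumps together all of the factors above; `min_request` is a hypothetical helper, not anything in the game.

```python
import math


def min_request(consumption_rate, latency):
    """Smallest request that keeps a requester from running dry (rough sketch).

    consumption_rate -- items per second the machines pull from the chest
    latency          -- seconds from the chest dipping below its request until
                        the refill arrives
    Items consumed while waiting must already be in the chest, so the request
    has to cover rate * latency (a bandwidth-delay product).
    """
    return math.ceil(consumption_rate * latency)
```

For example, a line pulling 2 items/s needs a request of about 30 when refills take 15 s, but about 90 if congestion pushes that to 45 s, which is why a fixed request that works today can fall short tomorrow.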

3) Based on (1), we can say the lognet transport protocol is 'connection-oriented' like TCP (it's not exactly, but again, it behaves comparably). We may also observe that the request size operates much like a sliding window protocol (https://en.wikipedia.org/wiki/Sliding_window_protocol), in that it limits the number of bots that may be 'in flight' at one time.
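The window analogy can be checked with a toy Python simulation. The assumptions are all hypothetical: each bot carries one item, every trip takes the same number of ticks, demand never runs out, and a new bot is dispatched the moment a slot in the window frees up.

```python
def delivered(window, trip_ticks, ticks):
    """Count deliveries when at most `window` bots may be in flight at once.

    Toy model: each bot carries one item and lands exactly `trip_ticks` ticks
    after dispatch; demand never runs out, so a new bot is dispatched as soon
    as a slot in the window frees up.
    """
    if trip_ticks < 1:
        raise ValueError("trip_ticks must be at least 1")
    in_flight = []  # arrival tick of each bot currently in the air
    count = 0
    for t in range(ticks):
        # Bots landing this tick deliver their item and free their slot.
        count += sum(1 for a in in_flight if a <= t)
        in_flight = [a for a in in_flight if a > t]
        # Top the window back up with newly dispatched bots.
        while len(in_flight) < window:
            in_flight.append(t + trip_ticks)
    return count
```

With a 10-tick trip, a window of 4 delivers 36 items in 100 ticks and a window of 8 delivers 72; doubling the trip to 20 ticks drops the window-4 case to 16. Throughput settles at roughly window / trip time, so the window, not the number of available bots, sets the ceiling.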

Therefore, to scale in response to network delay, we need to implement some kind of active rate negotiation: to increase transmission bandwidth, we simply increase the window size. Note that, without fine tuning, this runs counter to the robonet's preference for congestion avoidance: in response to congestion, the window size will keep increasing up to the maximum (the chest capacity).
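Here is a minimal sketch of a naive feedback rule of this kind, mostly to show the congestion problem. The rule and the name `next_window` are hypothetical: grow the window by one while deliveries take longer than a target latency, shrink it by one otherwise, clamped between 1 and the chest capacity. TCP's congestion control does roughly the opposite and backs its window off when it detects congestion.

```python
def next_window(window, latency, target_latency, capacity):
    """One step of a naive latency-driven window rule (hypothetical)."""
    if latency > target_latency:
        window += 1  # deliveries are slow: put more bots in the air
    else:
        window -= 1  # deliveries are fast enough: back off
    # Keep the window between 1 and the chest capacity.
    return max(1, min(window, capacity))


# Sustained congestion: every delivery takes 30 ticks against a 10-tick target.
w = 1
for _ in range(20):
    w = next_window(w, latency=30, target_latency=10, capacity=8)
print(w)  # 8: the window has climbed to the cap and stays there
```

Because slow deliveries are read as a reason to request more, congestion drives every requester using this rule to its maximum, adding bots to the very links that are already congested.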

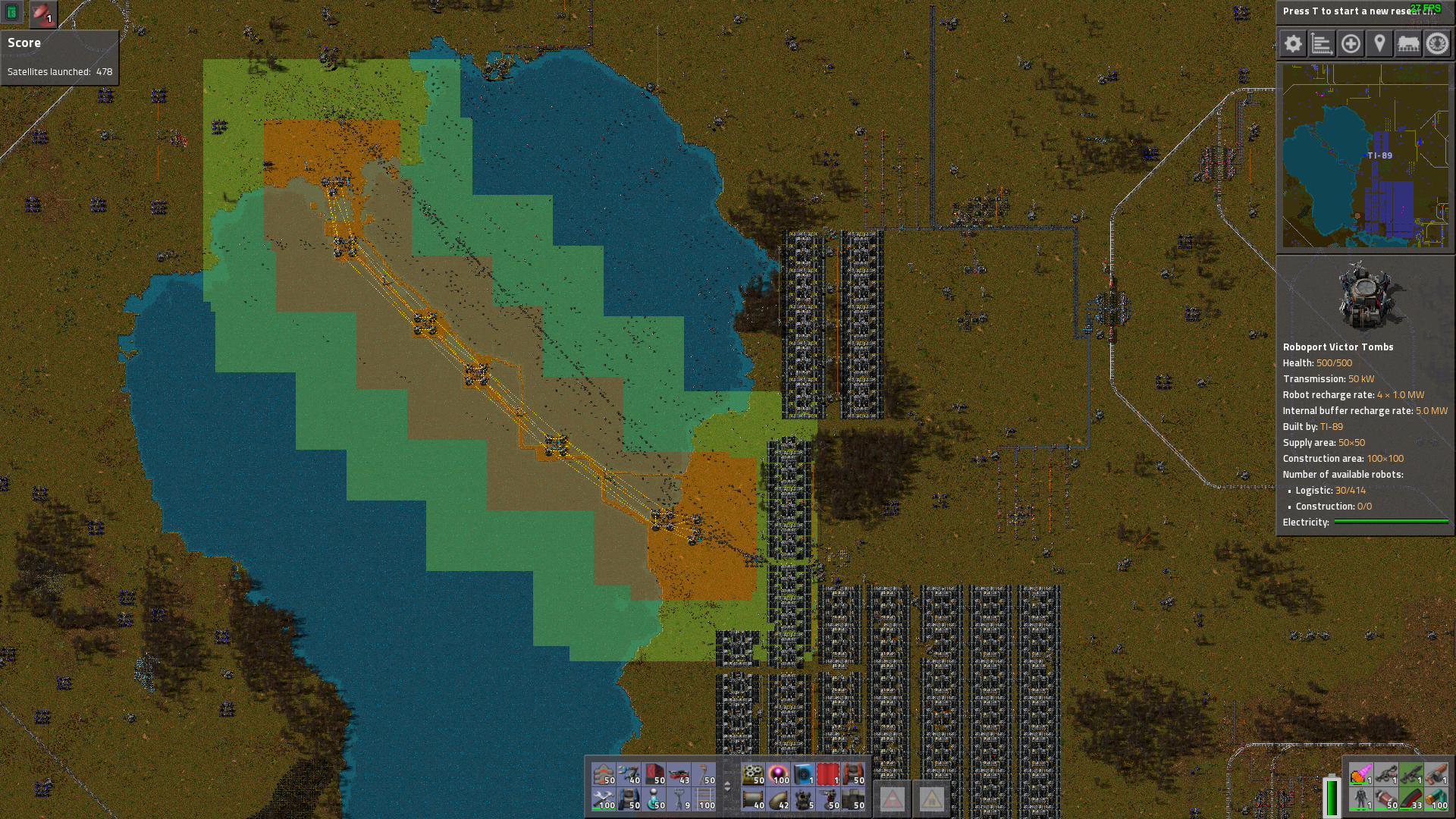

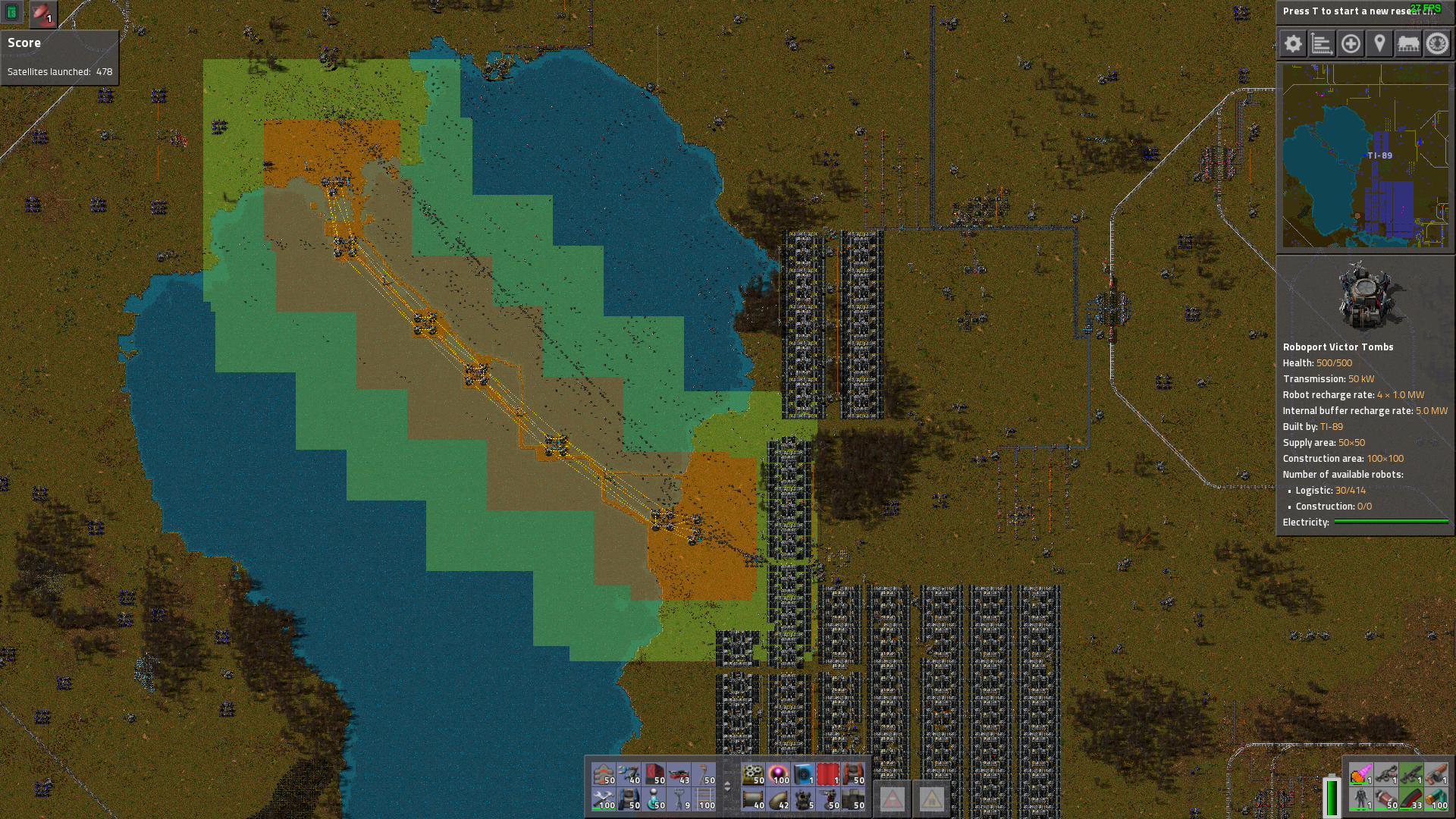

This can lead to poor performance in certain conditions. Take, for example, this toy network, which I built in response to a thread about bot pathing across/around lakes (which I still need to reply to; I decided I wanted to get rate negotiation done to max out this experiment, but I have not designed the circuit yet). The lake-spanning network is separate from the lake-circling one. Because bots stay in the air so much longer going around the lake than over it, throughput is hard-limited by the window size, even though the window size for providers is always the chest size. However, this bandwidth limit holds only as long as I change none of the factors mentioned above; in other words, I have quite a few options for optimizing the network.
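The lake case can be put in rough numbers with the same window reasoning. The figures below are invented for illustration, not measured from the network in the screenshots: a window of 48 items, a 40-tile path over the lake versus a 120-tile path around it, bots flying 4 tiles/s, one item per bot, and a window slot that frees only on delivery. `max_throughput` is a hypothetical helper.

```python
def max_throughput(window, path_length, speed):
    """Ceiling on deliveries per second when at most `window` items may be en route.

    Assumes one item per bot and that a slot frees only when its bot delivers,
    so each slot is tied up for path_length / speed seconds.
    """
    trip = path_length / speed
    return window / trip


over = max_throughput(window=48, path_length=40, speed=4)     # 10 s trips
around = max_throughput(window=48, path_length=120, speed=4)  # 30 s trips
print(over, around)
```

Tripling the path length cuts the ceiling to a third. The only ways to raise it are a bigger window or a shorter path (e.g. different roboport placement), which is the set of optimization choices mentioned above.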

Anywho, that got longer than I intended, and it wasn't entirely on topic :/. But here's my first attempt at a diagram:

I wrote in the combinator component I think I need, but I have not gotten around to implementing it and have probably missed something. Basically, I was thinking of having a base request of, e.g., one stack, then multiplying it by the window size to get the scaled request. The window size decreases when the request is met. This would probably also take some tweaking to perform efficiently. The base request size and scaling factor may need to be varied based on the characteristics of the network the requester is in.
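The scaled-request step might look something like this in Python. It is only a sketch of the arithmetic, not a working circuit: it multiplies every item's base request by the current window size, roughly what an arithmetic combinator applying the same multiplication to every input signal would do, and clamps the result to chest capacity. The function name, item names, stack sizes, and capacities are illustrative assumptions; the rule for changing the window is left out.

```python
def scaled_requests(base, window, capacity):
    """Scale each item's base request by the window size, capped at capacity.

    base     -- item name -> base request (e.g. one stack of that item)
    window   -- current window size, a positive integer
    capacity -- item name -> most of that item the chest can hold
    """
    return {item: min(amount * window, capacity[item]) for item, amount in base.items()}


base = {"iron-plate": 100, "copper-cable": 200}        # one stack each (illustrative)
capacity = {"iron-plate": 4800, "copper-cable": 9600}  # 48 stacks each (illustrative)
print(scaled_requests(base, 3, capacity))
```

At window 3 the request is three stacks per item; at a large enough window every item hits its cap, which is where the base size and scaling factor would need tuning for each network.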
