[Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Wed Oct 23, 2024 1:34 am

by ilbJanissary

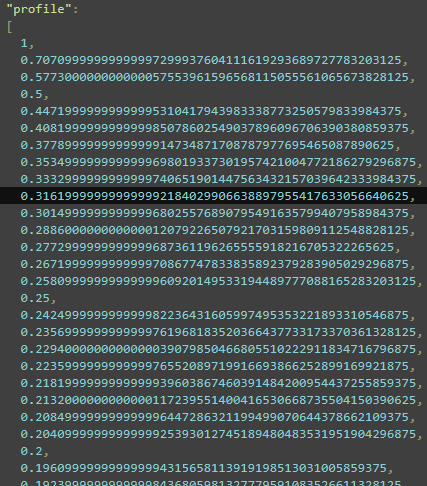

From the `--dump-data` file `data-raw-dump.json`. The beacon "profile" field in the JSON data dump has badly converted floats. I would consider this a minor issue, but it would be very nice if these were as clean as they are in the Lua state.

- 10-22-2024, 21-33-33.png (42.55 KiB) Viewed 333 times

Re: [2.0.8 + SA] Data dump JSON floats off

Posted: Wed Oct 23, 2024 6:21 pm

by Gweneph

Ok, I got nerd sniped by this one. At first I thought the decimals were just the exact representation of the double that was actually used... but they're not. They all seem to be exactly a tenth/fifth of a number that's exactly representable as a double.

For the numbers to come out like this, the double to string converter must be using a conversion that loses precision. Something like this:

Code: Select all

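import math

def pp(f: float):
    # Naive conversion: peel off one decimal digit per loop iteration.
    int_part = math.floor(f)
    f -= int_part
    s = str(int(int_part))
    if f == 0:
        print(s)
        return
    s += "."
    while f != 0:
        f *= 10  # this multiply may round, which is where precision is lost
        int_part = math.floor(f)
        f -= int_part
        s += str(int(int_part))
    print(s)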

The result of the first multiply by ten will need to be rounded if the binary significand ends in a 1, which will be most (half? all? I'm not actually sure) of the time when the double came from a decimal number that's not a fraction with a power of 2 denominator.
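To make that rounding concrete, here is a small check that uses Python's `fractions.Fraction` for exact arithmetic. The helper name `mul10_is_exact` is made up for this illustration; it only compares the floating-point product with the exact rational product.

```python
from fractions import Fraction

def mul10_is_exact(f: float) -> bool:
    """True if the double product f * 10 equals the exact rational product."""
    return Fraction(f * 10) == Fraction(f) * 10

# 0.1 is stored as a value slightly above 1/10, so the exact product is
# slightly above 1 and has to be rounded to fit back into a double.
print(mul10_is_exact(0.1))  # False: the product was rounded
print(mul10_is_exact(0.5))  # True: 5.0 is exactly representable
```

Running the same check over other inputs is an easy way to see how often the multiply actually rounds.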

In any case, I'd love for the double to string converter to follow something like what Python does, where it rounds to the shortest representation that will round trip back to the same double. For example, here are the 21 closest doubles to 0.1 with their full precision and what Python prints them as by default:

Code: Select all

0.09999999999999986677323704498121514916419982910156250000 0.09999999999999987
0.09999999999999988065102485279567190445959568023681640625 0.09999999999999988
0.09999999999999989452881266061012865975499153137207031250 0.0999999999999999
0.09999999999999990840660046842458541505038738250732421875 0.09999999999999991
0.09999999999999992228438827623904217034578323364257812500 0.09999999999999992
0.09999999999999993616217608405349892564117908477783203125 0.09999999999999994
0.09999999999999995003996389186795568093657493591308593750 0.09999999999999995
0.09999999999999996391775169968241243623197078704833984375 0.09999999999999996
0.09999999999999997779553950749686919152736663818359375000 0.09999999999999998
0.09999999999999999167332731531132594682276248931884765625 0.09999999999999999
0.10000000000000000555111512312578270211815834045410156250 0.1
0.10000000000000001942890293094023945741355419158935546875 0.10000000000000002
0.10000000000000003330669073875469621270895004272460937500 0.10000000000000003
0.10000000000000004718447854656915296800434589385986328125 0.10000000000000005
0.10000000000000006106226635438360972329974174499511718750 0.10000000000000006
0.10000000000000007494005416219806647859513759613037109375 0.10000000000000007
0.10000000000000008881784197001252323389053344726562500000 0.10000000000000009
0.10000000000000010269562977782697998918592929840087890625 0.1000000000000001
0.10000000000000011657341758564143674448132514953613281250 0.10000000000000012
0.10000000000000013045120539345589349977672100067138671875 0.10000000000000013
0.10000000000000014432899320127035025507211685180664062500 0.10000000000000014

For comparison, here are those same 21 doubles as converted by table_to_json (which I assume uses the same algorithm, since it behaves similarly):

Code: Select all

0.099999999999999857891452847979962825775146484375
0.099999999999999875655021241982467472553253173828125
0.09999999999999989341858963598497211933135986328125
0.099999999999999911182158029987476766109466552734375
0.0999999999999999289457264239899814128875732421875
0.0999999999999999289457264239899814128875732421875
0.09999999999999996447286321199499070644378662109375
0.09999999999999996447286321199499070644378662109375
0.099999999999999982236431605997495353221893310546875
0.099999999999999982236431605997495353221893310546875
0.1
0.10000000000000002220446049250313080847263336181640625
0.1000000000000000444089209850062616169452667236328125
0.1000000000000000444089209850062616169452667236328125
0.10000000000000006661338147750939242541790008544921875
0.10000000000000006661338147750939242541790008544921875
0.100000000000000088817841970012523233890533447265625
0.10000000000000011102230246251565404236316680908203125
0.10000000000000011102230246251565404236316680908203125
0.1000000000000001332267629550187848508358001708984375
0.1000000000000001332267629550187848508358001708984375

Notice that there are duplicates. That's because of the rounding on that first multiply by ten.
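The collapse can be reproduced with a rough sketch that runs a digit-by-digit conversion in the style of pp() over the 21 neighbours of 0.1 and compares it with Python's `repr()`. The helpers `step` and `naive_digits` are names invented here, and this is only my guess at the kind of algorithm involved, not the game's actual code.

```python
import struct

def step(x: float, k: int) -> float:
    """Return the double k representable values away from a positive x."""
    bits = struct.unpack("<q", struct.pack("<d", x))[0]
    return struct.unpack("<d", struct.pack("<q", bits + k))[0]

def naive_digits(f: float) -> str:
    """Digit-by-digit conversion in the style of pp() (non-negative f only)."""
    int_part = int(f)
    f -= int_part
    s = str(int_part)
    if f == 0:
        return s
    s += "."
    while f != 0:
        f *= 10          # may round; this is where neighbours collapse
        digit = int(f)
        f -= digit
        s += str(digit)
    return s

neighbours = [step(0.1, k) for k in range(-10, 11)]
naive = [naive_digits(x) for x in neighbours]
shortest = [repr(x) for x in neighbours]

print(len(set(naive)), "distinct strings from the naive conversion")
print(len(set(shortest)), "distinct strings from repr()")
```

The naive conversion yields fewer than 21 distinct strings, while `repr()` keeps all 21 doubles apart.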

I believe the standard C++ way to achieve the cleaner output is with printf("%.17g", number). That could probably be swapped in for whatever conversion is happening in the JSON library, and as an added bonus it will probably be slightly faster.
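One caveat worth illustrating: 17 significant digits guarantee the round trip, but they are not always the shortest string. Python's `format(x, ".17g")` uses the same `%g` style as C's printf, so a quick sketch (not a statement about any particular C library) shows the difference:

```python
x = 0.1
s17 = format(x, ".17g")   # same style of conversion as printf("%.17g", x)

print(s17)                # 0.10000000000000001
print(float(s17) == x)    # True: 17 significant digits round-trip
print(repr(x))            # 0.1, the shortest round-tripping form
```

So `%.17g` fixes the duplicates, but getting output as clean as the Lua state needs a shortest-representation step on top.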

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Mon Oct 28, 2024 1:11 pm

by Gweneph

I looked into how printf works and it has a bunch of stuff to handle full precision with multiprecision numbers. It seemed like it would actually be pretty slow, so I decided I'd try my hand at it:

Code: Select all

#include <cmath>
#include <cstdlib>   // std::div
#include <iostream>
#include <string>    // std::stod

//precondition: pos points to somewhere in a buffer that has at
//  least 30 more characters
//postcondition: pos points to a null character after the shortest decimal
//  string representation that will convert back to n
void to_json(double n,char*& pos){
    //todo: check NaN
    if(n<0){
        *(pos++)='-';
        n=-n;
    }
    if(n==0){
        *(pos++)='0';
        *pos=0;
        return;
    }
    //todo: check INF
    //increase precision so we don't lose bits when changing power.
    long double m = n;
    //find power of decimal scientific form.
    int e=0;
    while(m>1){
        m/=10;
        e++;
    }
    while(m<1){
        m*=10;
        e--;
    }
    char* start_of_number = pos;
    char* decimal_pos = pos+1;
    if(e>20){//positive exponent
    }else if(e>=0){//unlikely exponent, no leading zero
        decimal_pos = pos+e+1;
    }else if(e>-4){//no exponent, leading zero
        *(pos++)='0';
        *(pos++)='.';
        for(int zeros=1; zeros<-e; zeros++){
            *(pos++)='0';
        }
    }else{//negative exponent
    }
    //magic sauce: !!EDIT: this does a poor job (fails in both directions)
    long double rounder=std::ldexp(m,-54)*1e16;
    //get the first 16 digits
    for(int significant_digits = 0; significant_digits < 16; significant_digits++){
        if(pos==decimal_pos){
            *(pos++)='.';
        }
        long double digit = std::floor(m);
        m-=digit;
        *(pos++)='0'+(char) digit;
        m*=10;
    }
    //determine the 17th digit and truncate trailing zeros
    //we only need precision to rounder,
    //  so if we can make the 17th digit zero, do so.
    if(m+rounder >= 10){
        //we're close enough we can round up to 16 digits
        //this may cause repeated carrying
        do{
            pos--;
        }while(pos>start_of_number && (*pos=='9' || *pos=='.'));
        if(*pos=='9'){
            //we had 99...99 get rounded up to 100...00
            e++;
            *pos='1';
        }else{
            *pos+=1;
        }
    }else if(m-rounder<=0){
        //we're close enough we can round down to 16 digits
        //this may lead to many trailing zeros
        do{
            pos--;
        }while(*pos=='0' || *pos=='.');
    }else{
        //just round to the closest 17th digit
        *pos='0'+(char) std::floor(m+0.5);
    }
    //each branch above leaves pos pointing to the last non-zero digit
    pos++;
    //deal with the required zeros / exponent
    if(-4<e && e < pos-start_of_number+2){
        //no exponent
        //add back any required zeros (e.g. 34 -> 34000)
        int zeros = e + 1 - (pos-start_of_number);
        while(zeros-- >0){
            *(pos++)='0';
        }
        *pos=0;
        return;
    }
    //we have an exponent
    if(e>0 && e<=20){
        //we didn't add the decimal earlier
        char* return_pos=pos+1;
        decimal_pos=start_of_number+1;
        while(pos>decimal_pos){
            *pos = *(pos-1);
            pos--;
        }
        *pos='.';
        pos=return_pos;
    }
    *(pos++)='e';
    if(e<0){
        *(pos++)='-';
        e=-e;
    }
    int exp_digits;
    if(e>=100){
        exp_digits=3;
    }else if(e>=10){
        exp_digits=2;
    }else{
        exp_digits=1;
    }
    std::div_t dv;
    switch(exp_digits){
        case 3:
            dv = std::div(e,100);
            *(pos++)='0'+dv.quot;
            e=dv.rem;
            //no break
        case 2:
            dv = std::div(e,10);
            *(pos++)='0'+dv.quot;
            e=dv.rem;
            //no break
        default:
            *(pos++)='0'+e;
    }
    *pos=0;
}

int main()
{
    //double test = 0.00000010000000000000001;
    double test = 1234567891000;
    char buff[30]={0};
    char* pos=buff;
    to_json(test,pos);
    double check = std::stod(buff);
    std::cout<< buff << std::endl << (check == test) << std::endl;
    return 0;
}

It worked for everything I tried, including MAX_DBL, MAX_DBL-e, and underflow numbers, though for the underflow numbers it returned more digits than necessary. I didn't run any performance testing, but I'd imagine it'd be pretty fast. Since it uses a long double, though, it will probably lose one bit of precision on the switch. I'm pretty sure it could be done with just an int64_t instead, at the cost of a few more operations per loop. Oh, and the JSON spec doesn't allow NaN or Inf, so I wasn't sure what would be useful there.
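For the NaN/Inf question, one possible policy (purely an assumption on my part, not something the game does) is to emit `null` for non-finite values. A minimal Python sketch, with `encode_number` as a made-up helper name:

```python
import json
import math

def encode_number(x: float) -> str:
    """Encode a float as a JSON token; NaN/Inf become null (assumed policy)."""
    if not math.isfinite(x):
        return "null"
    return json.dumps(x)

print(encode_number(0.1))           # 0.1
print(encode_number(float("inf")))  # null
```

Python's own `json.dumps` writes `NaN` and `Infinity` by default, which strict parsers reject; passing `allow_nan=False` makes it raise `ValueError` instead.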

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Mon Oct 28, 2024 1:25 pm

by Rseding91

I was hoping to address this using std::to_chars and std::from_chars; however, it looks like the compiler for Mac doesn't support them because we support older macOS versions. So for now, this will remain in minor issues.

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Tue Oct 29, 2024 9:56 pm

by vadcx

The standard-standard C99/C++ way would be to use hexadecimal floats, which map exactly to the binary representation and don't have inaccuracy issues. Lua has been able to interpret them since 5.2, according to the manual, but the format would violate JSON. Still, I think this is important enough to output both formats (exact and approximated decimal).

https://gcc.gnu.org/onlinedocs/gcc/Hex-Floats.html

hexadecimal exponent notation:

https://en.cppreference.com/w/cpp/io/c/fprintf

However there are custom libraries out there for decimal representation:

https://github.com/miloyip/dtoa-benchmark

In the JavaScript world it might be smart to use the V8 engine routine.

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Tue Oct 29, 2024 10:00 pm

by Rseding91

As far as I've been told, JSON does not support hex floats. Even if we could support them easily when saving/loading from JSON, it wouldn't "follow the spec", and so I've been told we can't.

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Tue Oct 29, 2024 10:04 pm

by vadcx

Yeah, correct. I propose saving the hex float as a string alongside the usual decimal value. Though it depends on the consumers: does anyone really rely on accurate floats here?
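A rough sketch of what that could look like, using Python's built-in `float.hex()` and `float.fromhex()`. The field names `value` and `hex` and the helper `dump_with_hex` are hypothetical, chosen only for this illustration:

```python
import json

def dump_with_hex(x: float) -> str:
    """Emit a JSON object holding the decimal value and its hex-float string."""
    return json.dumps({"value": x, "hex": x.hex()})

doc = dump_with_hex(0.1)
print(doc)  # {"value": 0.1, "hex": "0x1.999999999999ap-4"}

# The hex string converts back to exactly the same double.
restored = float.fromhex(json.loads(doc)["hex"])
print(restored == 0.1)  # True
```

Because the hex form is stored as a string, the document stays valid JSON and consumers that don't care can ignore the extra field.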

Re: [Rseding91] [2.0.8 + SA] Data dump JSON floats off

Posted: Sat Nov 02, 2024 4:15 pm

by Gweneph

Hex floats are really only helpful for debugging purposes. 17 decimal digits is enough to guarantee being able to round trip back to the same float. The only hard part is knowing when rounding to 16 digits will also round trip back to the same number. My code above gets kinda close, but on further inspection it's sometimes wrong in both directions: sometimes it's off by one bit in the round trip, and sometimes it gives a longer decimal representation than required. In my hunt for additional information I stumbled on this library:
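For reference, the brute-force way to decide between 16 and 17 digits is to format at increasing precision and parse the result back. This is only a sketch of that idea in Python (`shortest_roundtrip` is a name made up here); dedicated algorithms avoid the repeated formatting and parsing, and I haven't checked that this always yields the strictly shortest string.

```python
def shortest_roundtrip(x: float) -> str:
    """Try 15, 16, then 17 significant digits; return the first that round-trips."""
    for digits in (15, 16, 17):
        s = format(x, f".{digits}g")
        if float(s) == x:
            return s
    return s  # not reached for finite x: 17 digits always round-trip

print(shortest_roundtrip(0.1))        # 0.1
print(shortest_roundtrip(0.1 + 0.2))  # 0.30000000000000004
```

It costs up to three format/parse passes per number, which may or may not matter for a one-off data dump.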

https://github.com/abolz/Drachennest?tab=readme-ov-file

I think it's likely that at least some of the algorithms in the repo should compile for all your targets even if std::to_chars won't.