Friday Facts #330 - Main menu and File Share Shenanigans

Re: Friday Facts #330 - Main menu and File Share Shenanigans

On the CONTINUE selection, my recommendation is to have some sort of description of what you are continuing, such as single-player with the file name or multiplayer with the server name.

Although many do play through Steam, I would like to see a way for someone to join a multiplayer game without having to go through Steam, authenticated instead through Wube or by the server administrator.

Player screen names can be an issue in multiplayer games where players use inappropriate screen names, such as "... fill in the blank here ...". Who determines that a screen name is inappropriate, and how will that be handled by all parties, i.e. Wube, the server operator, and the player? Similarly, how will avatar (screen name) collisions, where two users want the same screen name, be handled?

Hiladdar

Re: Friday Facts #330 - Main menu and File Share Shenanigans

I think it would be the right moment to implement viewtopic.php?f=6&t=65715&p=402545 (master sliders for resources), given the menu rework.

- BlueTemplar

- Smart Inserter

- Posts: 2420

- Joined: Fri Jun 08, 2018 2:16 pm

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Not the same menu, but definitely, yes, please!

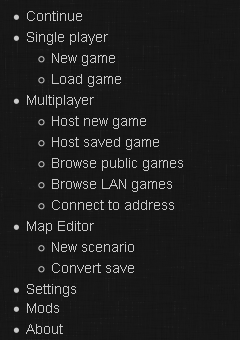

If I'm not mistaken:

- "Browse public games" is short for "Browse games for which Wube servers are doing matchmaking", and

- "Browse LAN games" searches for all games in your Local Area Network (which might be a virtual, over-the-Internet one, through services like GameRanger)

- "Connect to address" allows you to just type (or paste in) a server IP (would IPv6 work?) to connect to any game on the Internet (or LAN).

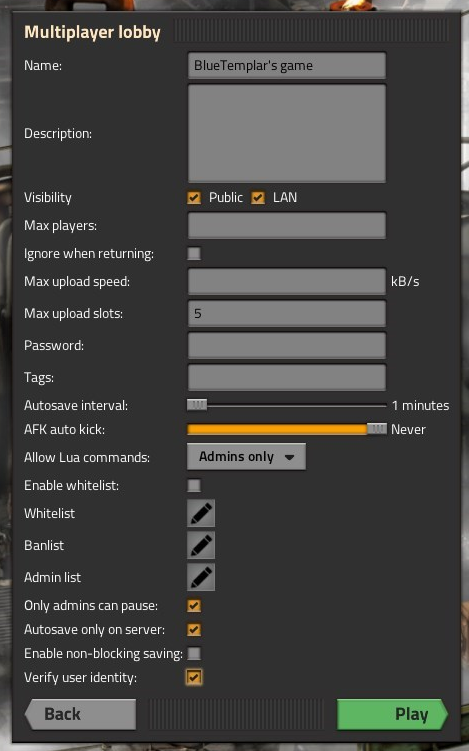

Then AFAIK, if the host of the game has checked the "Verify user identity" option (even without "Public Visibility"), the game will connect to Wube's servers to authenticate your account.

From what I understand, Steam servers don't get involved at this point, neither now nor after this change? (They are involved "upstream", to go from a Steam account to a Factorio full/mini account?)

BobDiggity (mod-scenario-pack)

RexTheCapt

- Burner Inserter

- Posts: 8

- Joined: Sun Jul 10, 2016 3:57 pm

Re: Friday Facts #330 - Main menu and File Share Shenanigans

I really recommend you guys get help building a NAS from LinusTechTips. They have some really good stuff on their YouTube channel, including a few videos about building your own super NAS.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

As SeeNo already mentioned:

No "Exit game" button in the menu anymore.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

I used ZFS back when I was using SPARC-based Sun boxes on Solaris 10. It worked well. But every time I have used ZFS outside of SPARC Solaris, it has been a shitshow. My own father works for Oracle and used ZFS for years as a field support technician (i.e. he fixes ZFS for customers, among other things), and even he lost about a month of time migrating data out of his zpool when he had to change from the now-defunct x86_64 version of Solaris to ZFS on Linux. The simple fact of the matter is that ZFS is ONLY good on Oracle products and within Oracle's ecosystem. FreeNAS may also be good, since the BSD license allows for use of the 'real' ZFS source code.

Linus is not wrong. It *is* a bad file system. It's very easy to lose data (example below), and all the things people point to and praise it for (ARC, copies, self-healing, etc.) don't work nearly as well as you'd think. Examples:

- ARC, for example, will eat up 25% of your system memory, but you'll rarely, if ever, have a single read or write that is faster than drive speed in any scenario.

- Despite having 4 drives that *should* be able to write at ~450 MB/s, you'll be lucky to see half that. Same with reads, even on large files. Other file systems, and even normal hardware RAID cards (good ones, with battery backup), can beat ZFS's performance here.

- The ONLY way to get near a drive's peak performance is to create a JBOD of disks with each disk as its own vdev. This means there is no redundancy: `copies=2` does NOT protect you from a drive failure, and with the default `copies=1`, self-healing can't work because there is no second copy of the data anywhere.

- On losing data: if you do a `zfs upgrade` or `zpool upgrade` and then find out that some other change (udev upgrade, BIOS incompatibility, etc.) makes it so that you can't boot your system, then your data is now trapped inside a zpool you cannot access. You have to find a way to import that zpool on another machine that *can* run the newer kernel without crashing. The data should still be there, but losing access to data for a week is just as big a problem as losing it forever.

- The dedup feature is a joke at best. The number of cases in which it will save you space is laughable, and the memory cost of turning it on is horrific.
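To put a rough number on the dedup memory cost, here is a minimal Python sketch. It assumes the commonly quoted rule of thumb of about 320 bytes of RAM per dedup-table (DDT) entry, with one entry per unique block; the real per-entry size varies between ZFS versions, and the helper name `ddt_ram_bytes` is made up for illustration.

```python
# Rough estimate of the RAM needed to hold a ZFS dedup table (DDT) in memory.
# ASSUMPTION: ~320 bytes per DDT entry is a commonly quoted rule of thumb,
# not an exact figure; actual entry sizes depend on the ZFS version.
BYTES_PER_DDT_ENTRY = 320


def ddt_ram_bytes(unique_data_bytes: int, avg_block_bytes: int,
                  bytes_per_entry: int = BYTES_PER_DDT_ENTRY) -> int:
    """Hypothetical helper: one DDT entry per unique block of data."""
    if avg_block_bytes <= 0:
        raise ValueError("average block size must be positive")
    blocks = -(-unique_data_bytes // avg_block_bytes)  # ceiling division
    return blocks * bytes_per_entry


if __name__ == "__main__":
    KiB, GiB, TiB = 2**10, 2**30, 2**40
    for block in (128 * KiB, 64 * KiB, 8 * KiB):
        ram = ddt_ram_bytes(1 * TiB, block)
        print(f"1 TiB of unique data, {block // KiB} KiB blocks: "
              f"{ram / GiB:.1f} GiB of DDT")
    # Prints 2.5, 5.0 and 40.0 GiB respectively.
```

Under that assumption, 1 TiB of unique data at a 64 KiB average block size needs about 5 GiB of RAM just for the table, and smaller blocks make it much worse.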

I'm just trying to save you guys headaches down the road. Truly.

- BlueTemplar

- Smart Inserter

- Posts: 2420

- Joined: Fri Jun 08, 2018 2:16 pm

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Philip017 wrote: ↑Fri Jan 17, 2020 8:28 pm[...]

As for NAS systems, I have never used one, but I've heard of them failing far too frequently. With their proprietary file systems and data encryption, retrieving your files when the NAS crashes is much more complex than with a standard file system (NTFS for Windows, or ext4 for Linux). Even if your files are encrypted, using a standard file system and encryption method means less downtime and quicker access to your files when a hardware failure occurs. Get a reliable PC or server board and a case that can hold the number of drives you need, and set it up yourself so you know how it works and what hardware and software it is using.

[...]

Hmm, aren't there, by this point, "plug-and-play" home/small business NASes that work with either NTFS or ext4 and are RAID-ed, so you just have to swap a disk when one fails?

Kiu wrote: ↑Fri Jan 17, 2020 5:39 pm

Had some QNAP and Netgear stuff in the past (it was already at the company) and had a lot of fun with that *CENSORED*...

Synology is OK, but you have to take care not to use too many features/apps. At least, if something goes south, there are manuals on how to access your data with a common Linux (if you avoid the syno-raid stuff). And don't use desktop drives.

FreeNAS/ZFS if you want to build something with a nice UI (Supermicro has some nice cases).

And if things get "interesting", some kind of Ceph cluster (SUSE Enterprise Storage, etc.)...

[...]

And aren't those just network drives using standard protocols, so there are no issues with proprietary software (not even with the technically proprietary NTFS, since there's an open-source driver for it now)?

(And can't RAID disks just be used standalone? Isn't it only re-syncing them that might get complex if you ever decide to separate them?)

BobDiggity (mod-scenario-pack)

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Personally, I think there should be two continue buttons, one for single-player and one for multiplayer.

It is quite common to switch between these two modes, e.g. if your friend calls it a day and you want to play some more, or if you're just loading single-player for a moment to check something.

Also, do you already have an official bet with the Satisfactory team on who is going to release version 1.0 first?

I am a translator. And what did you do for Factorio?

Check out my mod "Realistic Ores" and my other mods!

Re: Friday Facts #330 - Main menu and File Share Shenanigans

BlueTemplar wrote: ↑Fri Jan 17, 2020 10:34 pm

Hmm, aren't there, by this point, "plug-and-play" home/small business NASes that work with either NTFS or ext4 and are RAID-ed, so you just have to swap a disk when one fails?

And aren't those just network drives using standard protocols, so there are no issues with proprietary software (not even with the technically proprietary NTFS, since there's an open-source driver for it now)?

(And can't RAID disks just be used standalone? Isn't it only re-syncing them that might get complex if you ever decide to separate them?)

The closest thing to this is something like 45Drives (what Linus Tech Tips uses, and FAR outside the price/scope of what Wube needs!) or a Backblaze Storage Pod (cheaper than 45Drives, but still overkill for what Wube needs). The fundamental problem is value engineering. They make those NAS boxes as cheap as possible, and to do that, they cut corners. Cut enough corners and you end up with a circle instead of a square.

RAID writes the data to the hard drive in such a way that, unless you are using software mirroring (RAID 1), the data will be useless outside of the RAID set. Hardware RAID 1 would also be unreadable. The reason software RAID 1 can be read is something of a mystery to me, but I've done it enough times to know it works. Imagine having 3 notebooks. You write the sentence "I love pink ponies they make me happy." by striping one word in each notebook. So book 1 has "I ponies me", book 2 has "love they happy", and book 3 has "pink make". If you pulled out just notebook 2, the information wouldn't make any sense. This is why you can't pull one drive out of a RAID set (except RAID 1, sometimes) and still use it.
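The notebook analogy maps directly onto RAID 0-style striping. Here is a minimal Python sketch (the function names are illustrative, not from any RAID tool) that deals words round-robin across three "notebooks" and reassembles them, which only works when every notebook is present:

```python
# RAID 0-style striping, using the notebook analogy: word i goes to
# notebook i % n. Function names are illustrative only.
def stripe(words: list[str], n_books: int) -> list[list[str]]:
    books: list[list[str]] = [[] for _ in range(n_books)]
    for i, word in enumerate(words):
        books[i % n_books].append(word)
    return books


def unstripe(books: list[list[str]]) -> list[str]:
    # Walk the books round-robin again; this needs every book to be present.
    n = len(books)
    total = sum(len(book) for book in books)
    return [books[i % n][i // n] for i in range(total)]


sentence = "I love pink ponies they make me happy".split()
books = stripe(sentence, 3)
for number, book in enumerate(books, start=1):
    print(f"book {number}: {' '.join(book)}")
# book 1: I ponies me
# book 2: love they happy
# book 3: pink make

print(" ".join(unstripe(books)))  # I love pink ponies they make me happy
```

Reading notebook 2 on its own gives "love they happy", which is exactly why a single striped member is useless outside its set.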

- MasterBuilder

- Filter Inserter

- Posts: 348

- Joined: Sun Nov 23, 2014 1:22 am

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Already mentioned, but...

Where's the exit button?! I need my exit button! Gotta have more exit button!

Give a man fire and he'll be warm for a day. Set a man on fire and he'll be warm for the rest of his life.

- BlueTemplar

- Smart Inserter

- Posts: 2420

- Joined: Fri Jun 08, 2018 2:16 pm

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Kingdud wrote: ↑Fri Jan 17, 2020 10:41 pm

[...] Imagine having 3 notebooks. You write the sentence "I love pink ponies they make me happy." by striping one word in each notebook. So book 1 has "I ponies me", book 2 has "love they happy", and book 3 has "pink make". If you pulled out just notebook 2, the information wouldn't make any sense. This is why you can't pull one drive out of a RAID set (except RAID 1, sometimes) and still use it.

Wait, isn't "RAID" short for Redundant Array of Independent Drives? That seems neither redundant nor independent to me!

BobDiggity (mod-scenario-pack)

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Kingdud wrote: ↑Fri Jan 17, 2020 10:32 pm

I used ZFS back when I was using SPARC-based Sun boxes on Solaris 10. It worked well. But every time I have used ZFS outside of SPARC Solaris, it has been a shitshow. My own father works for Oracle and used ZFS for years as a field support technician (i.e. he fixes ZFS for customers, among other things), and even he lost about a month of time migrating data out of his zpool when he had to change from the now-defunct x86_64 version of Solaris to ZFS on Linux. The simple fact of the matter is that ZFS is ONLY good on Oracle products and within Oracle's ecosystem. FreeNAS may also be good, since the BSD license allows for use of the 'real' ZFS source code.

Linus is not wrong. It *is* a bad file system. It's very easy to lose data (example below), and all the things people point to and praise it for (ARC, copies, self-healing, etc.) don't work nearly as well as you'd think. Examples:

- ARC, for example, will eat up 25% of your system memory, but you'll rarely, if ever, have a single read or write that is faster than drive speed in any scenario.

- Despite having 4 drives that *should* be able to write at ~450 MB/s, you'll be lucky to see half that. Same with reads, even on large files. Other file systems, and even normal hardware RAID cards (good ones, with battery backup), can beat ZFS's performance here.

- The ONLY way to get near a drive's peak performance is to create a JBOD of disks with each disk as its own vdev. This means there is no redundancy: `copies=2` does NOT protect you from a drive failure, and with the default `copies=1`, self-healing can't work because there is no second copy of the data anywhere.

- On losing data: if you do a `zfs upgrade` or `zpool upgrade` and then find out that some other change (udev upgrade, BIOS incompatibility, etc.) makes it so that you can't boot your system, then your data is now trapped inside a zpool you cannot access. You have to find a way to import that zpool on another machine that *can* run the newer kernel without crashing. The data should still be there, but losing access to data for a week is just as big a problem as losing it forever.

- The dedup feature is a joke at best. The number of cases in which it will save you space is laughable, and the memory cost of turning it on is horrific.

Fundamentally, you guys went in the right direction by building your own file server. Just get off ZFS. Please. For your own sakes. It's a time bomb waiting to take your data down again. Just stick with software RAID 5. The software part is key; hardware cards will be faster, but software RAID is nearly ubiquitous. mdadm isn't especially stable, so avoid that. Specifically, mdadm likes to randomly kick drives out of the RAID set without a good reason. My #1 task when I was a Unix admin was responding to automated e-mails saying that mdadm had ejected yet another drive from the RAID set for god knows what reason. One time in 5 it was due to an actual hardware problem, but normally it was just mdadm being temperamental.

I'm just trying to save you guys headaches down the road. Truly.

How many years have you been out of IT? You realize RAID 5 has been obsolete for years now? And you advise software RAID, but not mdadm or ZFS? What exactly are you trying to say?

The number of IT 'experts' in this thread makes me reconsider the average Factorio player...

One that confuses RAID 0 with RAID 1, one that recommends RAID 5, one that thinks LTT knows storage, others recommending unRAID, and the number of people running QNAP. Anyway, glad Wube got their NAS needs met!

Re: Friday Facts #330 - Main menu and File Share Shenanigans

BlueTemplar wrote: ↑Fri Jan 17, 2020 10:49 pm

Wait, isn't "RAID" short for Redundant Array of Independent Drives? That seems neither redundant nor independent to me!

Independent has multiple meanings. Meaning 2 of 4 on Google is "not depending on another for livelihood or subsistence", which is what you're thinking it means (modified slightly to the context of computers instead of humans, but meh). Meaning 4 of 4 on Google is what it actually means in this context: "not connected with another or with each other; separate". The difference is that the drives themselves are discrete units; it doesn't mean that the drives work together yet are each capable of operating without support from their peers.

So...imagine you're cooking potatoes. Let's say they are the 'little' potato variety such that each potato is only 1-2" in diameter. If you were to cook and eat only a single potato, you'd be rather annoyed with life. But if you cooked and ate 10, then you'd have a serviceable side-item for your meal. Each individual potato is independent and unique. It can have its own shape, size, color, texture, etc. Much like how you can make a RAID set of drives from WD, Hitachi, Toshiba, Seagate; all with different speeds and sizes (I said can, not should!). Before RAID was a concept you had to have all matching hardware from the same vendor, and frequently the drives were serialized or indexed in such a way that you couldn't just move drive 4 to slot 5, it would only work in slot 4 for ~reasons~. RAID, as a concept, tried to make large scale disk collections more plug and play, moving much of the management complexity to software instead of hardware.

Make sense?

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Kingdud wrote: ↑Fri Jan 17, 2020 10:41 pm

RAID writes the data to the hard drive in such a way that, unless you are using software mirroring (RAID 1), the data will be useless outside of the RAID set. Hardware RAID 1 would also be unreadable. The reason software RAID 1 can be read is something of a mystery to me, but I've done it enough times to know it works. Imagine having 3 notebooks. You write the sentence "I love pink ponies they make me happy." by striping one word in each notebook. So book 1 has "I ponies me", book 2 has "love they happy", and book 3 has "pink make". If you pulled out just notebook 2, the information wouldn't make any sense. This is why you can't pull one drive out of a RAID set (except RAID 1, sometimes) and still use it.

Not all RAID levels use striping, and different RAID levels give you different guarantees for your data redundancy when it comes to drive failure (or drive removal).

To explain a few:

RAID0 usually stripes data but does not make copies (or use parity); if any drive fails, all data may be lost or unusable.

RAID1 generally does not stripe; it copies every bit (or every word, to go by your example) to every drive in the array. As long as at least one drive works, you still have all the data.

RAID5 usually stripes and uses parity for redundancy, which is more space-efficient than plain copies. For that, it needs at least 3 drives, of which one can fail. If two fail, data is lost.

So what you explained in your example was RAID0
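To make the parity part concrete, here is a minimal Python sketch of a single RAID 5 stripe, assuming two equal-size data chunks plus one XOR parity chunk, next to a RAID 1 mirror. It ignores that real RAID 5 rotates which drive holds parity from stripe to stripe, and all function names are hypothetical.

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(*chunks: bytes) -> bytes:
    """Byte-wise XOR of equal-length chunks: the RAID 5 parity operation."""
    if len({len(c) for c in chunks}) != 1:
        raise ValueError("chunks must all have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*chunks))


def raid5_write(data_chunks: list[bytes]) -> list[bytes | None]:
    """One stripe: the data chunks plus an XOR parity chunk on the last drive.
    (Real RAID 5 rotates the parity drive from stripe to stripe.)"""
    return [*data_chunks, xor_bytes(*data_chunks)]


def raid5_rebuild(stripe: list[bytes | None]) -> list[bytes]:
    """Recover a stripe in which at most one chunk is missing (None)."""
    missing = [i for i, chunk in enumerate(stripe) if chunk is None]
    if len(missing) > 1:
        raise ValueError("two or more drives lost: the stripe is unrecoverable")
    rebuilt = list(stripe)
    if missing:
        # XOR of all surviving chunks (data + parity) equals the lost chunk.
        rebuilt[missing[0]] = xor_bytes(*(c for c in stripe if c is not None))
    return rebuilt


def raid1_write(chunk: bytes, n_drives: int) -> list[bytes]:
    """RAID 1: every drive holds a full copy of the data."""
    return [chunk] * n_drives


drives = raid5_write([b"love", b"pink"])  # 2 data drives + 1 parity drive
drives[0] = None                          # simulate losing the first drive
print(raid5_rebuild(drives)[:2])          # [b'love', b'pink']

mirror = raid1_write(b"ponies", 2)
print(mirror[1])                          # b'ponies': one drive alone is enough
```

Losing one drive is recoverable because XOR-ing everything that survives gives back the missing chunk; losing two leaves one equation with two unknowns, which is the "if two fail, data is lost" case.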

EDIT:

Regarding ZFS: at work we used it to store the virtual hard drives of our virtual machines. The decision was made because we could use some small SSDs for cache and some large HDDs for capacity. In theory, performance should have been better than HDDs alone and a bit slower than going SSD-only. In practice, if you simultaneously started `apt update` on more than 3 VMs, performance was so bad it disrupted everything else doing I/O. Even the ZFS on Linux devs could not tell us what was wrong with our setup.

So in my experience, ZFS and Linux don't mix well...

Last edited by oi_wtf on Fri Jan 17, 2020 11:26 pm, edited 3 times in total.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

bc74sj wrote: ↑Fri Jan 17, 2020 10:56 pm

How many years have you been out of IT? You realize RAID 5 has been obsolete for years now? And you advise software RAID, but not mdadm or ZFS? What exactly are you trying to say?

The number of IT 'experts' in this thread makes me reconsider the average Factorio player...

One that confuses RAID 0 with RAID 1, one that recommends RAID 5, one that thinks LTT knows storage, others recommending unRAID, and the number of people running QNAP. Anyway, glad Wube got their NAS needs met!

That's not very nice. Come say that to my face. Remember the human. Now then, every single major storage array is STILL based on RAID 5. DellEMC's Unity (VNX), VMAX, XtremIO, and Isilon arrays are all based on RAID 5 under the covers. Dell's Compellent and PS-series arrays both use RAID 5 (or 6) by default. Pure Storage uses RAID 5 internally. Tegile (Western Digital), NetApp, Hitachi, etc.: literally every single enterprise storage array still uses RAID 5 internally on its drives.

So, no, I am not wrong. RAID 5 was not deprecated. It is no longer recommended for large, slow hard drives, where rebuilding after a single drive failure would take more than 24 hours. That's why every single enterprise storage company has switched over to SSD-only storage. The few that still sell spinning-hard-drive-based storage are, more and more, defaulting to RAID 6 because the rebuild times on their large-capacity drives are so long. And if you happen to buy a hard-drive-based array and have a problem with a rebuild, they immediately try to sell you on SSDs because they know that the HDD-based models have huge problems.
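The rebuild-time concern is simple arithmetic: a replacement drive has to be rewritten end to end, so the best case is capacity divided by sustained rebuild rate. A small Python sketch; the drive sizes and rates below are hypothetical examples, and real rebuilds on a busy array are usually slower.

```python
def rebuild_hours(capacity_tb: float, rebuild_mb_per_s: float) -> float:
    """Best-case time to rewrite one replacement drive end to end.

    Uses decimal units, as drive vendors do: 1 TB = 10**12 bytes and
    1 MB = 10**6 bytes. Real rebuilds on a busy array are usually slower.
    """
    if rebuild_mb_per_s <= 0:
        raise ValueError("rebuild rate must be positive")
    seconds = capacity_tb * 10**12 / (rebuild_mb_per_s * 10**6)
    return seconds / 3600


# Hypothetical drive sizes (TB) and sustained rebuild rates (MB/s).
for capacity_tb, rate in [(1, 100), (4, 100), (12, 100), (12, 50)]:
    hours = rebuild_hours(capacity_tb, rate)
    flag = "over 24 h" if hours > 24 else "under 24 h"
    print(f"{capacity_tb:>2} TB at {rate:>3} MB/s: {hours:5.1f} h ({flag})")
# Roughly 2.8 h, 11.1 h, 33.3 h and 66.7 h.
```

During that whole window a RAID 5 set has no redundancy left, which is why larger drives push vendors toward RAID 6.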

Unraid doesn't solve the rebuild time problem. Neither does ZFS. So...no, I'm not wrong. At all. Be a nicer person, not an asshole with a keyboard.

Last edited by Kingdud on Fri Jan 17, 2020 11:22 pm, edited 1 time in total.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

oi_wtf wrote: ↑Fri Jan 17, 2020 11:05 pm

Kingdud wrote: ↑Fri Jan 17, 2020 10:41 pm

RAID writes the data to the hard drive in such a way that, unless you are using software mirroring (RAID 1), the data will be useless outside of the RAID set. Hardware RAID 1 would also be unreadable. The reason software RAID 1 can be read is something of a mystery to me, but I've done it enough times to know it works. Imagine having 3 notebooks. You write the sentence "I love pink ponies they make me happy." by striping one word in each notebook. So book 1 has "I ponies me", book 2 has "love they happy", and book 3 has "pink make". If you pulled out just notebook 2, the information wouldn't make any sense. This is why you can't pull one drive out of a RAID set (except RAID 1, sometimes) and still use it.

Not all RAID levels use striping, and different RAID levels give you different guarantees for your data redundancy when it comes to drive failure (or drive removal).

To explain a few:

RAID0 usually stripes data but does not make copies (or use parity); if any drive fails, all data may be lost or unusable.

RAID1 generally does not stripe; it copies every bit (or every word, to go by your example) to every drive in the array. As long as at least one drive works, you still have all the data.

RAID5 usually stripes and uses parity for redundancy, which is more space-efficient than plain copies. For that, it needs at least 3 drives, of which one can fail. If two fail, data is lost.

So what you explained in your example was RAID0, not RAID1, which has no redundancy, as a few people correctly noticed.

My example covers both RAID 0 and RAID 5. I specifically mentioned, 3 times, that RAID 1 was different and actually *could* (potentially) be used without its other member RAID disk. Re-read what I wrote.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Oh, sorry, missed that bit.

Removing only the "not RAID1" bit from the last sentence makes it correct, though.

Last edited by oi_wtf on Fri Jan 17, 2020 11:31 pm, edited 2 times in total.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Eh, yeah. RAID 5 does have parity data, but if my notebook example had 'word word <gibberish>', it'd just confuse the guy I was trying to help. And, functionally, data is encoded on RAID 5 the same way as on RAID 0 (assuming the stripe sizes/disk counts are the same); you just do one extra write of parity data. I didn't feel like being that pedantic. Few people *really* need to know that, and those who do tend to learn it pretty fast.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

Kingdud wrote: ↑Fri Jan 17, 2020 11:29 pm

Eh, yeah. RAID 5 does have parity data, but if my notebook example had 'word word <gibberish>', it'd just confuse the guy I was trying to help. And, functionally, data is encoded on RAID 5 the same way as on RAID 0 (assuming the stripe sizes/disk counts are the same); you just do one extra write of parity data. I didn't feel like being that pedantic. Few people *really* need to know that, and those who do tend to learn it pretty fast.

I mainly just wanted to explain that "mystery" of RAID1...

Reading data from a single drive that was or is part of a RAID1 array works because RAID1 guarantees that the array keeps working even after it has degraded to a single drive.

Re: Friday Facts #330 - Main menu and File Share Shenanigans

oi_wtf wrote: ↑Fri Jan 17, 2020 11:32 pm

Kingdud wrote: ↑Fri Jan 17, 2020 11:29 pm

Eh, yeah. RAID 5 does have parity data, but if my notebook example had 'word word <gibberish>', it'd just confuse the guy I was trying to help. And, functionally, data is encoded on RAID 5 the same way as on RAID 0 (assuming the stripe sizes/disk counts are the same); you just do one extra write of parity data. I didn't feel like being that pedantic. Few people *really* need to know that, and those who do tend to learn it pretty fast.

I mainly just wanted to explain that "mystery" of RAID1...

Reading data from a single drive that was or is part of a RAID1 array works because RAID1 guarantees that the array keeps working even after it has degraded to a single drive.

Oh, no, the mystery to me is that there's metadata a hardware RAID card writes to the drive that software RAID either doesn't write, or writes somewhere else, such that the drive still works with RAID disabled. That's the mystery to me: where is that metadata, and why is it there? I've just never cared enough to google the answer. The day I need to repair a RAID 1 set, I'll look into it. :p