Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 2:46 pm

by ikarikeiji

Zaflis wrote:pleegwat wrote:Doesn't work if you're actually destroying entities outside their own update call though, because you may end up swapping an entity which acted this cycle with one which has not.

Not if you iterate the vector from end to beginning. Some pseudocode, if you wanted to make a particle system:

Code: Select all

    for (p = PCount-1; p >= 0; p--)
      particle[p].DealWithIt()
      particle[p].life--
      if particle[p].life <= 0 then
        PCount--
        particle[p] = particle[PCount]
        particle.deleteLast()
      end
    end

What you get is that every single particle is dealt with exactly once on every loop, and deleting one takes just one swap with the last element. On the downside, the list won't keep its original order, which is only a problem if you need sorting. But for a free list of entities that doesn't sound like it is the case, and for particles the order doesn't matter either.

+1 to this. You can also append something to the vector without any trouble. Actual workable C++:

Code: Select all

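    #include <vector>

    std::vector<Entity*> active_entities;

    void step_all_active() {
        // Count down with an unsigned-safe idiom: "n >= 0" is always true for
        // size_t, and size()-1 wraps around when the vector is empty.
        for (size_t n = active_entities.size(); n-- > 0; ) {
            // Copy the pointer instead of keeping a reference into the vector:
            // step() may push_back and reallocate the storage.
            Entity *entity = active_entities[n];
            entity->step();
            if (!entity->is_active()) {
                // Swap-and-pop: overwrite this slot with the last entry.
                active_entities[n] = active_entities.back();
                active_entities.pop_back();
            }
        }
    }

    void SomeDerivedEntity::step() {
        // ... do some updating...
        if (do_we_need_to_make_another_entity_active) {
            Entity &other_entity = the_other_entity;
            if (!other_entity.is_active()) {
                other_entity.set_active(true);
                // Appended behind the current index, so it is first stepped next pass.
                active_entities.push_back(&other_entity);
            }
        }
    }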

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 4:58 pm

by Gymble

ikarikeiji wrote:+1 to this. You can also append something to the vector without any trouble.

Except that in this case the added entity will not be processed during the current pass.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 7:17 pm

by ikarikeiji

Gymble wrote:Except that in this case the added entity will not be processed during the current pass.

I suspect you wouldn't want to: if you did, you could end up with an infinite loop if (somehow) each entity decided that activating another entity was necessary, especially if that entity had already been processed.

Conversely, note that entities would always be processed on the pass where they get marked as inactive. So that would "make up" for not processing them when they are activated.
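To make the pass semantics concrete, here is a minimal, self-contained sketch of the backward swap-and-pop loop with activation during the pass. The `Entity` fields and the `activate` helper are made up for illustration and are not Factorio's code; the point is only that an entity activated mid-pass is not stepped until the next pass, while a deactivating entity is still stepped on the pass where it turns inactive.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a game entity; none of these names come from Factorio.
struct Entity {
    int ticks_left = 0;       // the entity goes inactive when this reaches 0
    bool active = false;
    Entity* wakes = nullptr;  // optional entity to activate when this one finishes
    int steps = 0;            // number of times the entity was stepped
};

std::vector<Entity*> active_entities;

void activate(Entity& e) {
    if (!e.active) {
        e.active = true;
        active_entities.push_back(&e);  // may reallocate the vector
    }
}

void step(Entity& e) {
    ++e.steps;
    if (--e.ticks_left <= 0) {
        e.active = false;
        if (e.wakes != nullptr) activate(*e.wakes);
    }
}

void step_all_active() {
    // Walk from the back. Entities appended during the pass either stay behind
    // the current index or get swapped into the slot that was just stepped,
    // so they are not stepped until the next pass.
    for (std::size_t n = active_entities.size(); n-- > 0; ) {
        Entity* e = active_entities[n];  // copy the pointer: step() may reallocate
        assert(e != nullptr);
        step(*e);
        if (!e->active) {
            active_entities[n] = active_entities.back();
            active_entities.pop_back();
        }
    }
}
```

With one entity that finishes on its first step and wakes a second one, the second entity ends the pass in the active list with zero steps taken, and gets its first step on the following pass.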

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 7:41 pm

by mOoEyThEcOw

mensinda wrote:Have you considered using PLF Colony (http://plflib.org/colony.htm)? PLF Colony is an unordered container that basically has most benefits of std::vector (memory locality) and std::list (O(1) insert and remove).

+1 to this one. Avoiding rolling your own data structures is always a good rule, and this one beats using the wrong data structure in specific patterns just to ensure certain properties.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 8:16 pm

by Avezo

Would you tell us more about new team members?

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 9:15 pm

by Zulan

mensinda wrote:kovarex wrote:Actually, one possibility could be to have a vector of ActiveEntity pointers and remove from it by switching with the last one. The entity would know the index of its position in the vector. This way you still have O(1) removal, instead of 2 pointers, you have 1 index, and you only have to touch one entity when removing, not the 2 neighbours.

Have you considered using PLF Colony (http://plflib.org/colony.htm)? PLF Colony is an unordered container that basically has most benefits of std::vector (memory locality) and std::list (O(1) insert and remove).

I recommend the website and this video: https://www.youtube.com/watch?v=wBER1R8YyGY for more information.

Colony is a very interesting data structure and the talk is excellent. However, I don't think it (directly) applies here. If I understand it correctly, Colonies cover insertion and erasure. Updatable entities can be inactive - they still exist, but they do not participate in the update loop. Also I don't think Colonies cover polymorphic types.

So maybe the jump-count skip lists could be interesting here. But ownership and updating are done in different scopes, so that doesn't even match. If the ownership / lifetime is not changed, Kovarex' idea is probably the best to try.

By the way, generally the active state of entities changes very often (an Inserter is only active while moving). If I understand it correctly, the lifetime of entities is mostly not so dynamic.
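For reference, kovarex's idea quoted above can be sketched roughly like this. It is only an illustration under assumed names (`ActiveList`, `active_index`, `kNotActive` are all hypothetical), not the game's implementation: each entity stores its slot index, and removal overwrites that slot with the last pointer and fixes up the one entity that moved.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sentinel meaning "this entity is not in the active vector" (hypothetical name).
constexpr std::size_t kNotActive = static_cast<std::size_t>(-1);

// Hypothetical entity that remembers its own slot in the active vector.
struct Entity {
    std::size_t active_index = kNotActive;
    bool is_active() const { return active_index != kNotActive; }
};

class ActiveList {
public:
    // O(1): append and record the slot index in the entity.
    void activate(Entity& e) {
        if (e.is_active()) return;
        e.active_index = slots_.size();
        slots_.push_back(&e);
    }

    // O(1): move the last pointer into the freed slot and pop the back.
    void deactivate(Entity& e) {
        if (!e.is_active()) return;
        const std::size_t i = e.active_index;
        assert(i < slots_.size() && slots_[i] == &e);
        Entity* last = slots_.back();
        slots_[i] = last;
        last->active_index = i;  // only the moved entity needs touching
        slots_.pop_back();
        e.active_index = kNotActive;
    }

    std::size_t size() const { return slots_.size(); }
    Entity* at(std::size_t i) const { return slots_[i]; }

private:
    std::vector<Entity*> slots_;
};
```

Since activation state lives in the index rather than in ownership, entities keep existing while inactive, which matches the point that active state changes far more often than lifetime.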

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sat Aug 19, 2017 9:28 pm

by Zulan

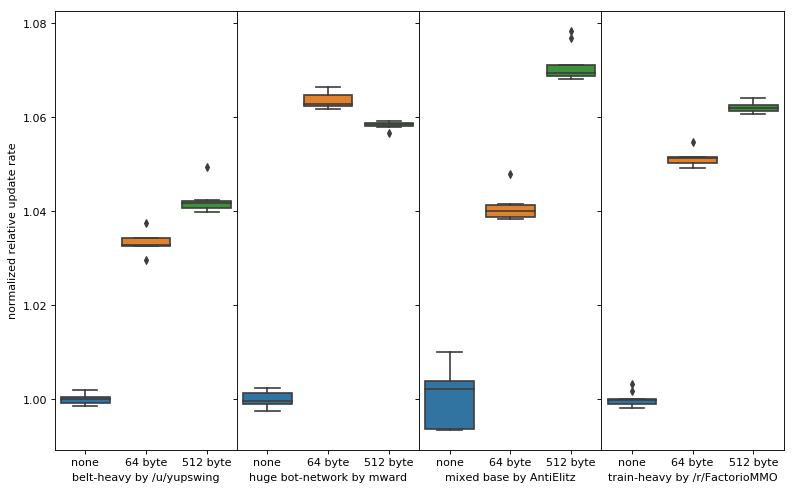

Here are the same measurements from a somewhat older AMD A10-7850K:

It's about twice as slow as the Skylake. Prefetching still helps very well, although not quite to the extent of current Intel architectures.

I don't think the prefetching hint is as specific and uarch-dependent as the non-temporal stores. Even if the hardware prefetcher already does a good job, there would only be tiny overhead from extra redundant instructions. Now it's impossible to exclude some weird interactions within the uarch. So yes, benchmarking is the best way to go.
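As a rough illustration of the kind of prefetch hint under discussion (not the actual benchmark and not Factorio's code), a linked-list walk can ask for the next node while working on the current one. `__builtin_prefetch` is a GCC/Clang builtin; `Node`, `link_nodes` and `sum_with_prefetch` are made-up names for this sketch.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical list node standing in for an entity in an intrusive list.
struct Node {
    Node* next = nullptr;
    long value = 0;
};

// Links the nodes of an array into one list and returns its head.
Node* link_nodes(Node* nodes, std::size_t count) {
    assert(nodes != nullptr && count > 0);
    for (std::size_t i = 0; i < count; ++i) {
        nodes[i].next = (i + 1 < count) ? &nodes[i + 1] : nullptr;
    }
    return &nodes[0];
}

// Sums all values, hinting the CPU to start loading the next node early.
long sum_with_prefetch(const Node* head) {
    long total = 0;
    for (const Node* n = head; n != nullptr; n = n->next) {
#if defined(__GNUC__) || defined(__clang__)
        // A hint only: it never changes the result, and whether it helps
        // depends on the hardware, so it has to be benchmarked.
        __builtin_prefetch(n->next);
#endif
        total += n->value;
    }
    return total;
}
```

On a small, contiguous array like the one a test would use, the hint is unlikely to make any measurable difference; it is nodes scattered across a large heap where it might pay off.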

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 5:54 am

by TheTom

Zulan wrote:Here are the same measurements from a somewhat older AMD A10-7850K:

Can someone try that on a current Zen core?

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 6:29 am

by Artman40

Are these 2% improvements cumulative rather than separate, unlike mining productivity upgrades?

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 9:22 am

by Zulan

Artman40 wrote:Are these 2% improvements cumulative rather than separate, unlike mining productivity upgrades?

The ItemStack improvements are already included in the baseline for the reported prefetching measurements. Therefore the combined improvement is the product of the two individual improvement factors, not their sum.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 9:49 am

by AtomicStryker

Can I just state how awesome it is that you squeeze your code for performance like this, when just about any AAA studio goes "meh, they'll buy better computers" instead?

Or just leave their engines horrible messes like GTA 4...

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 9:54 am

by Engimage

Zulan you should definitely get purple

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 11:35 am

by vanatteveldt

PacifyerGrey wrote:Zulan you should definitely get purple

This

Also kudos for not just posting technical details on a public forum (which always makes you vulnerable to criticism from the nerdy classes, including yours truly, since any design is a tradeoff), but then going into intensive discussion with the people who respond. I really hope that you get some good new ideas or realizations from the discussion, you guys deserve it!

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 7:13 pm

by ili

TheTom wrote:Zulan wrote:Here are the same measurements from a somewhat older AMD A10-7850K:

Can someone try that on a current Zen core?

I can run it on my PC if someone can tell me how to make this benchmark

Re: Friday Facts #204 - Another day, another optimisation

Posted: Sun Aug 20, 2017 9:42 pm

by ratchetfreak

Rseding91 wrote:Rakshasa wrote:You guys are using... linked lists?...

Why, oh why do you use linked lists? It is supposed to be common knowledge that in almost all situations even a straight vector is better.

At the very least something like C++'s deque would be better.

Tell me how you'd get: O(1) time to turn an entity on/off and use a vector to maintain which entities are active and allow an entity to go active/inactive during the update loop as you iterate active entities.

You can't have them all

By using a linked list with the entity itself being the link you have O(1) time to turn any entity on/off and can iterate all active entities while still allowing other entities to go active/inactive during the update loop - you can't do that with a vector as mutating the vector while iterating isn't allowed.

As with virtually everything programming: you can't use absolutes - as you said "in almost all situations" -> this isn't one of them

O(n) operation for iteration+delete:

Code: Select all

    update_list.erase(
        std::remove_if(update_list.begin(), update_list.end(),
            [=](Entity* e) {
                e->update(performance);
                return !e->needsUpdateNextTick();
            }),
        update_list.end());

And push_back to add (to a temporary vector, appending the new entities afterwards).

Also it may help to split the entities into stuff that needs to be touched every frame and stuff that doesn't.

If you can make the part that needs to be touched every frame a fixed size for a wide variety of your entities, then you can put that in a vector by value and avoid the linked list.

For example a powered entity doing stuff (assembler crafting, inserter spinning, ...) just needs to know how much power it gets per tick and whether the timer (modulated by how much power it actually got) is done. Only when it is done does the full entity need to be touched. Well, then, and when it needs to be rendered.

If it's just waiting on items then it shouldn't be in the active list at all.
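A self-contained sketch of that erase/remove_if pass plus the temporary vector might look like the following. The `Entity` here is a made-up stand-in (its `update()` takes no arguments, unlike the snippet above), and `pending`, `activate` and `tick` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical entity: stays in the update list while it has work left.
struct Entity {
    int work_left = 0;
    int updates = 0;
    void update() { ++updates; --work_left; }
    bool needs_update_next_tick() const { return work_left > 0; }
};

std::vector<Entity*> update_list;
std::vector<Entity*> pending;  // entities activated during the current tick

// Activation never touches update_list directly, so it is safe mid-tick.
void activate(Entity& e) { pending.push_back(&e); }

void tick() {
    // One O(n) pass: update every entity and compact the survivors to the front.
    auto first_done = std::remove_if(update_list.begin(), update_list.end(),
        [](Entity* e) {
            assert(e != nullptr);
            e->update();
            return !e->needs_update_next_tick();
        });
    update_list.erase(first_done, update_list.end());

    // Newly activated entities join only after the pass has finished.
    update_list.insert(update_list.end(), pending.begin(), pending.end());
    pending.clear();
}
```

Unlike swap-and-pop, this keeps the surviving entities in their original relative order. One caveat: std::remove_if applies the predicate once per element but does not formally promise a visiting order, so a hand-written compaction loop may be the safer choice if update order matters.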

Re: Friday Facts #204 - Another day, another optimisation

Posted: Mon Aug 21, 2017 9:07 am

by rgx

Since we are still on the subject of linked lists (with full address to neighbours stored in the objects), an advantage is that it allows entities to be moved in memory, with small (or no) changes to the simulation code. So if the problem is that entities are misaligned and randomly scattered in memory, the active entities (around 100 MB out of several GB perhaps) can be moved to one contiguous block. And they can be ordered in the order that they are accessed during the simulation. This process could be automated, much like defragmentation of a disk.

It's just a thought, sorry if this has been suggested before in any of the many threads about optimization - I have not read all of them.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Mon Aug 21, 2017 9:59 am

by Zulan

rgx wrote:Since we are still on the subject of linked lists (with full address to neighbours stored in the objects), an advantage is that it allows entities to be moved in memory, with small (or no) changes to the simulation code. So if the problem is that entities are misaligned and randomly scattered in memory, the active entities (around 100 MB out of several GB perhaps) can be moved to one contiguous block. And they can be ordered in the order that they are accessed during the simulation. This process could be automated, much like defragmentation of a disk.

Not really - there are still lots of dependencies between entities (e.g. wakeup lists). Moving stuff in memory is really difficult. I tried. Even if you get the object->object references right, there can still be references on the stack...

Re: Friday Facts #204 - Another day, another optimisation

Posted: Mon Aug 21, 2017 1:43 pm

by rgx

Hmmmm. So the code has gradually moved away from accessing and manipulating the data in the form of doubly linked lists, for performance reasons. Parts of the code, I'm guessing save/load and drawing/zooming maybe, are still browsing through the lists node by node. The code doing most of the work is referencing nodes directly, through tables or possibly other lists with references. By chunks perhaps. It is kind of a hybrid structure - not necessarily a bad thing because every structure will be a compromise regardless, favouring one aspect over others.

In any case, I do hope you find a way to solve the problem. There must be a way, only not as easy as I thought.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Mon Aug 21, 2017 7:24 pm

by TheTom

Zulan wrote:Here are the same measurements from a somewhat older AMD A10-7850K:

prefetch_amd_kaveri.png

It's about twice as slow as the Skylake. Prefetching still helps very well, although not quite to the extent of current Intel architectures.

I don't think the prefetching hint is as specific and uarch-dependent as the non-temporal stores. Even if the hardware prefetcher already does a good job, there would only be tiny overhead from extra redundant instructions. Now it's impossible to exclude some weird interactions within the uarch. So yes, benchmarking is the best way to go.

Well, your A10-7850K is old enough to use DDR3, which means that if you are bandwidth bound you will REALLY hit the ceiling on that one.

Re: Friday Facts #204 - Another day, another optimisation

Posted: Tue Aug 22, 2017 1:12 am

by Ntropy

bobucles wrote:When multiplying and dividing extremely specific floating point decimals there is a very good chance you'll accumulate a "drift" from tiny rounding errors (reason number 907.0000000000000156 to hate floats). Why not use a simple list of zoom values? Or at least snap the zoom level when it should hit an integer.

Hello!

I have been testing the zooming system, taking captures and measuring pixels, and the 1:1 zoom (F9 by default) seems correct to me (the distance between 2 parallel contiguous belts is 32 pixels). However, I can't set exactly a 2:1 ratio in order to see all the HR entities pixel perfect: if I hit zoom in seven times from 1:1 zoom, the distance between identical belts is 62 pixels. Shouldn't it be 64 pixels?

It's not a problem for me, I'm just commenting on it; if I want to make massive and beautifully pixel-perfect screenshots I use the "/c game.take_screenshot{<parameter>=<value>,...}" command.

Maybe it would be useful for the HUD to show the zoom % for a couple of seconds when you zoom in or out, to see if we are playing at a "good" ratio that improves quality.

Also, I agree with the option of a list of fixed zoom values in order to avoid using long decimals. Right now, there are 12 zoom-in levels below 1:1 ratio, and 13 zoom-out levels above the 1:1 ratio (26 zoom levels total); the biggest zoom seems to be 0.25 ratio, and the smallest zoom is 3.125 ratio. Maybe an idea is to reduce the number of zoom levels and use only "easy" ratios, for example: 0.25 / 0.5 / 0.75 / 1 / 1.25 / 1.5 / 1.75 / 2 / 2.25 / 2.5 / 2.75 / 3 / 3.25 / 3.5 / 3.75 / 4
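A rough sketch of both suggestions, a fixed table of zoom values and snapping near integers, could look like this. It says nothing about how the game actually computes zoom; `kZoomLevels`, `Zoom` and `snap_to_integer` are made-up names, and the sketch assumes "zoom in" means moving to a larger ratio.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

// The "easy" ratios from the list above. Every entry is a multiple of 0.25,
// so each one is exactly representable as a double.
constexpr std::array<double, 16> kZoomLevels = {
    0.25, 0.5, 0.75, 1.0, 1.25, 1.5, 1.75, 2.0,
    2.25, 2.5, 2.75, 3.0, 3.25, 3.5, 3.75, 4.0};

// Stores an index into the table instead of a running product, so zooming in
// and back out always returns to exactly the same value.
class Zoom {
public:
    double value() const { return kZoomLevels[index_]; }
    void zoom_in()  { if (index_ + 1 < kZoomLevels.size()) ++index_; }
    void zoom_out() { if (index_ > 0) --index_; }

private:
    std::size_t index_ = 3;  // start at the 1:1 entry
};

// Alternative: keep a multiplicative zoom but snap to the nearest integer
// ratio (1, 2, 3, ...) when the value is within `tolerance` of it.
double snap_to_integer(double zoom, double tolerance) {
    assert(tolerance >= 0.0);
    const double nearest = std::round(zoom);
    const bool close = std::fabs(zoom - nearest) <= tolerance;
    return (nearest >= 1.0 && close) ? nearest : zoom;
}
```

With the table, four steps in from 1:1 land on exactly 2.0, and no sequence of steps can accumulate rounding error because nothing is ever multiplied.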

Thank you for the game, for the level of dedication and attention to detail, and for the awesome FFF